Getting Started

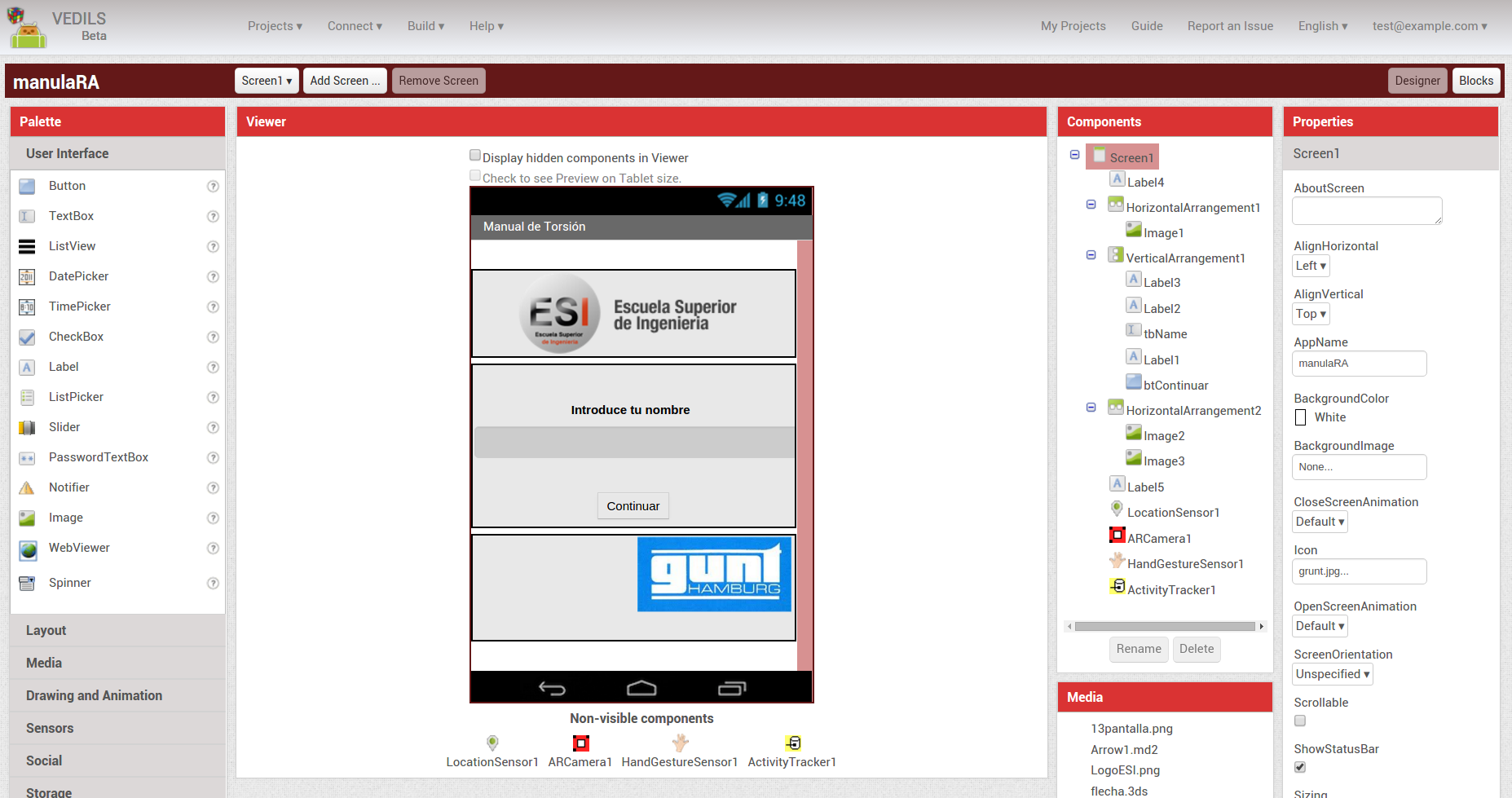

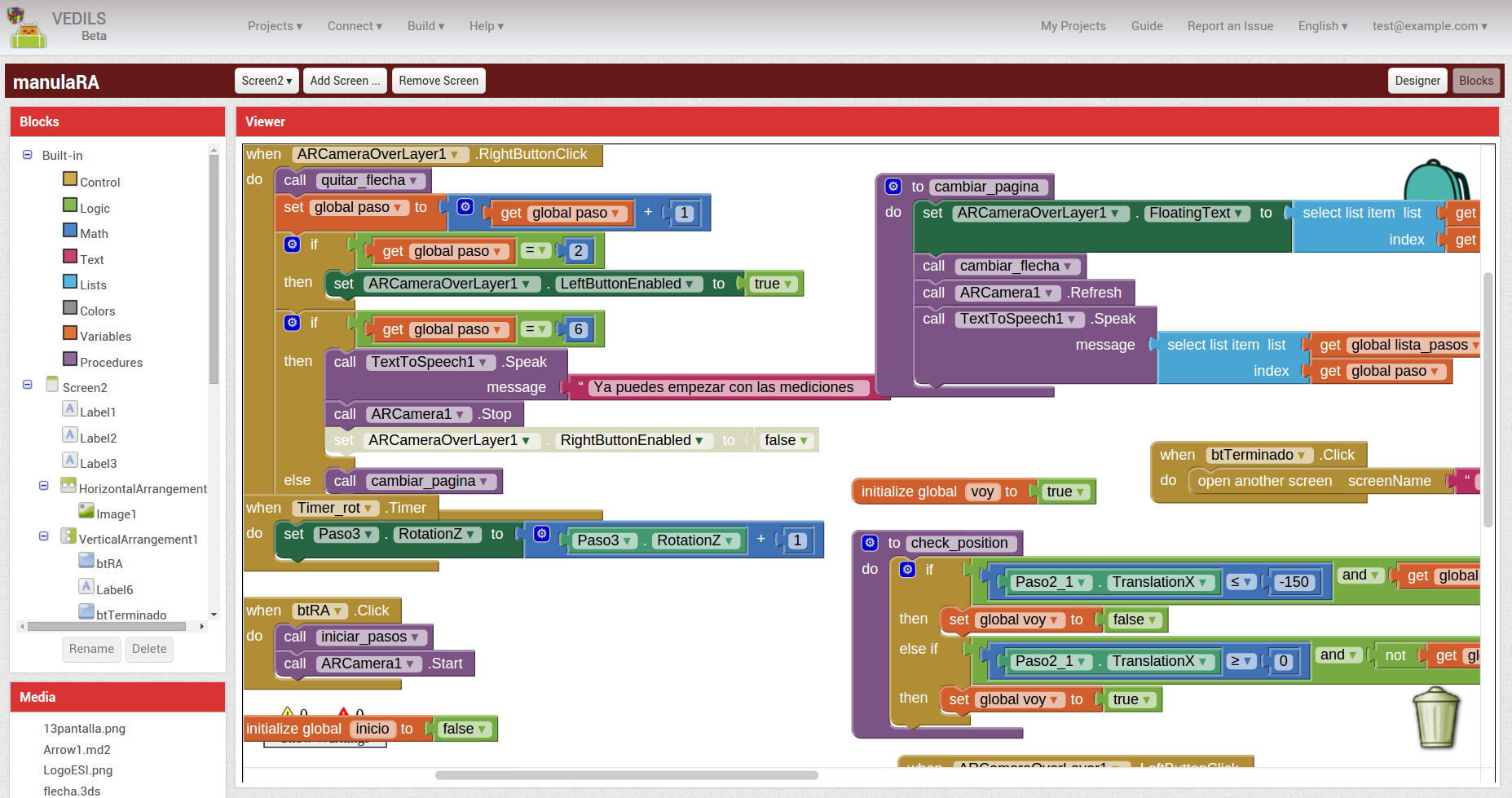

To work with VEDILS, you only need a web browser (we recommend Google Chrome) to access the website. VEDILS is composed of two parts. The first is the app designer [Figure 1], where we drag elements from the toolbox (which can be configured later in the settings tab). The second, called Blocks [Figure 2], is where we define the logic behind the app using a visual language.

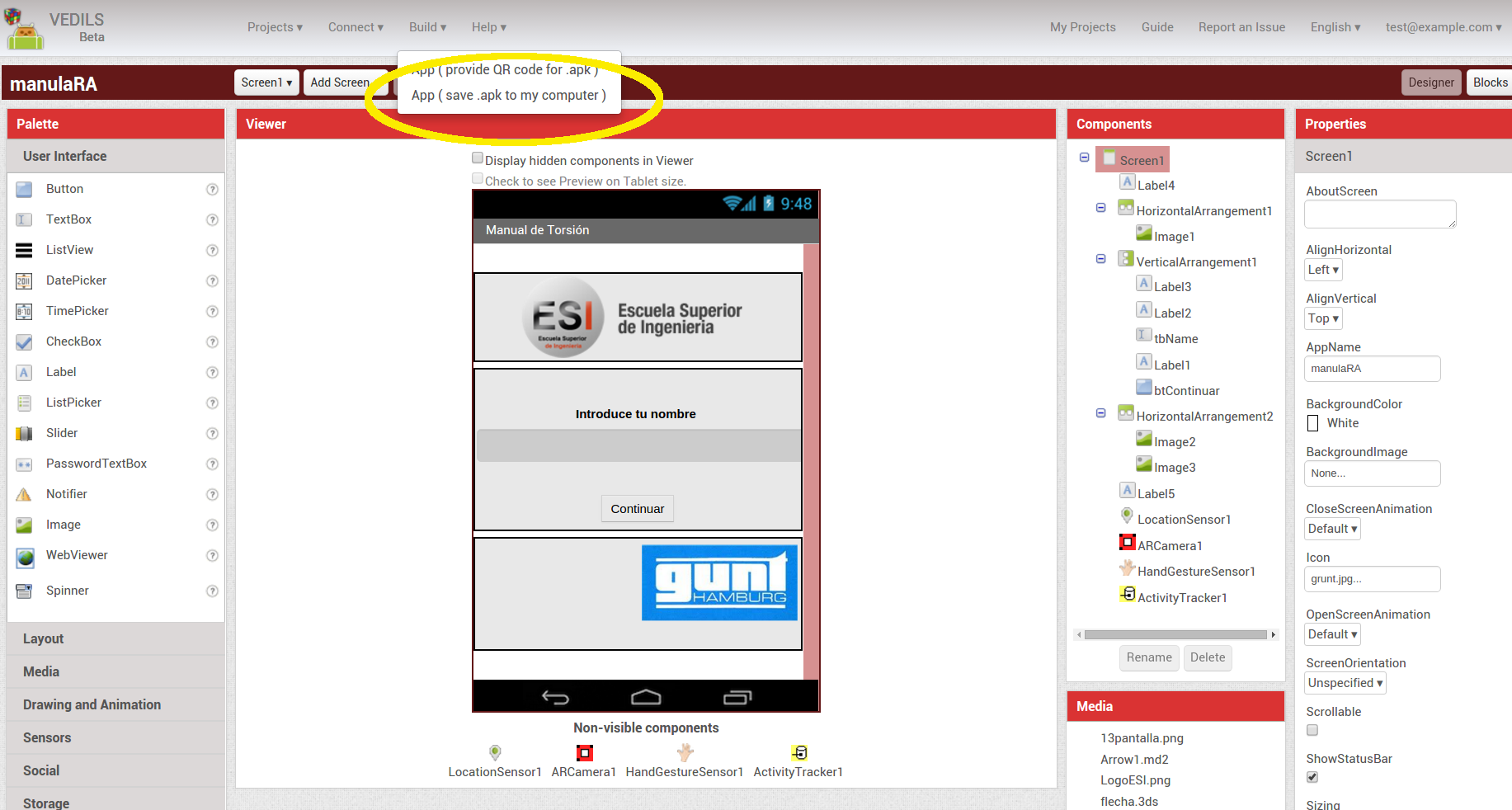

Once we have developed our app, we must generate the .apk file in order to install it on our Android mobile devices. To do so [Figure 3], we click the option “Build - App (save .apk to my computer)”. After copying the .apk file to our mobile device, we only need to open the file to install the app. Remember that the device must be configured to allow the installation of third-party applications. For further information, you can watch the following video.
